The case for Fast Transforms as Averaging Machines

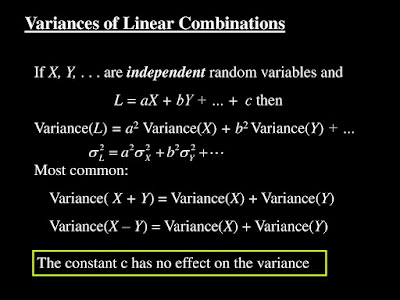

Are Fast Transforms like the FFT and Walsh Hadamard transform averaging machines? Certainly there is an all sum term such as A+B+C+D+E+F+G in the diagram which can be considered an average especially after vector length renormalization where all the output terms would typically be multiplied by 1/sqr(width), 1/sqr(8) in this case, to leave vector magnitude unchanged by the transform. For a different input, say {+1,+1,-1,-1,-1,-1,+1,+1] the output term A+B-C-D-E-F+G+H becomes 1+1+1+1+1+1+1+1. For that input pattern that particular output term becomes an averaging operation. Degrees of freedom For standard averaging of n values many different combinations of values can result in the same average. There are many degrees of freedom. As you start demanding more and more output terms of a fast transform be particular values the degrees of freedom in the input shrink. Until, when you demand all the outputs be particular values, then only one exact input vector will give that result. To g...