Subrandom Projections


Performing random sign flips on natural data, such as images, before applying a fast transform such as the FFT or the Walsh-Hadamard transform (WHT) produces outputs that look like Gaussian noise.

Essentially, the sign flips turn the input data into a collection of random variables, which the fast transform then weights, sums and differences. By the Central Limit Theorem the outputs are approximately Gaussian.

Put another way, random sign flips combined with a fast transform create Random Projections (RPs) of the input data.
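A minimal Python sketch of the random case, assuming a 1D signal whose length is a power of two (an image would be flattened first) and an orthonormal Walsh-Hadamard transform. The names `fwht` and `random_projection` are illustrative and are not taken from the linked p5.js code.

```python
import math
import random


def fwht(vec):
    """Fast Walsh-Hadamard transform with orthonormal scaling.

    Returns a new list; the input length must be a power of two.
    """
    n = len(vec)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    out = [float(x) for x in vec]
    h = 1
    while h < n:
        for start in range(0, n, 2 * h):
            for i in range(start, start + h):
                a, b = out[i], out[i + h]
                # Butterfly: each stage sums and differences pairs of values.
                out[i], out[i + h] = a + b, a - b
        h *= 2
    # Scaling by 1/sqrt(n) makes the transform length-preserving and self-inverse.
    scale = 1.0 / math.sqrt(n)
    return [x * scale for x in out]


def random_projection(data, seed=0):
    """Flip the sign of each element with probability 1/2, then apply the WHT."""
    rng = random.Random(seed)
    flipped = [x if rng.random() < 0.5 else -x for x in data]
    return fwht(flipped)


if __name__ == "__main__":
    # A smooth "natural" signal: a linear ramp of 1024 samples.
    data = [i / 1023 for i in range(1024)]
    energy = sum(x * x for x in data)
    plain = fwht(data)
    proj = random_projection(data, seed=1)
    # Without sign flips most of the energy sits in a few coefficients;
    # with random flips it is spread out, like Gaussian noise.
    print("largest energy share, plain WHT:", max(x * x for x in plain) / energy)
    print("largest energy share, random projection:", max(x * x for x in proj) / energy)
```

Because the scaled WHT is orthonormal, the projection keeps the total energy of the input; only its distribution across coefficients changes.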

While such RPs have many uses, they have some disadvantages, such as the complete loss of direct rotation, scaling and translation (RST) information about objects in the original image.

It would be nice to keep some of that RST information while retaining some of the useful information-distribution properties of Random Projections.

One solution to the problem is to use subrandom (low discrepancy) numbers to decide the sign flips.

For example, subrandom numbers generated by additive recurrence are a good choice.
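A minimal sketch of the subrandom sign decision, assuming the common additive recurrence s_n = frac(s_0 + n·α) with α set to the golden ratio conjugate and a threshold of 0.5. These parameter choices and the function names are assumptions, not taken from the linked sketches. For an image, the signs would be applied to the pixels in some fixed order (for example row-major), and the flipped data then passed through a fast transform such as the WHT.

```python
import math

# Golden ratio conjugate: a common irrational step for additive recurrence (assumption).
ALPHA = (math.sqrt(5) - 1) / 2


def additive_recurrence(count, alpha=ALPHA, start=0.0):
    """Subrandom sequence s_n = frac(start + n * alpha) for n = 0..count-1."""
    return [(start + n * alpha) % 1.0 for n in range(count)]


def subrandom_signs(count, alpha=ALPHA, start=0.0):
    """Map each sequence value to +1 (below 0.5) or -1 (0.5 and above)."""
    return [1 if s < 0.5 else -1 for s in additive_recurrence(count, alpha, start)]


def apply_signs(data, signs):
    """Element-wise sign flips; the result would then be fed to a fast transform."""
    if len(data) != len(signs):
        raise ValueError("data and signs must have the same length")
    return [x * s for x, s in zip(data, signs)]


if __name__ == "__main__":
    print(subrandom_signs(16))
    # Low discrepancy: the counts of +1 and -1 stay close to balanced.
    print("sum of 1024 signs:", sum(subrandom_signs(1024)))
```

Unlike independent coin flips, neighbouring signs here follow a regular, quasi-periodic pattern, which is what leaves some spatial structure in the projected output.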

|

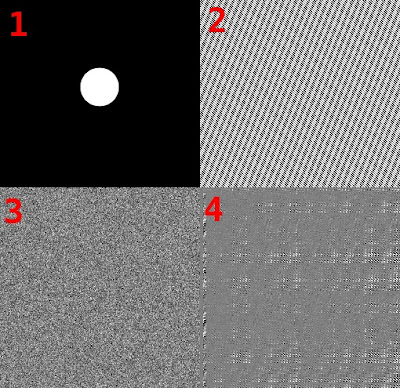

| 1. An input image. 2. Subrandom sign flips. 3. A random projection of the input image. 4. A subrandom projection of the input image. |

Some example code:

https://editor.p5js.org/siobhan.491/sketches/kzJwiLxUD

Use Cases

One possible use case is as a cheap alternative to convolution in the first layer of an artificial neural network, or in any application where you want to distribute the input information while still preserving location and RST information.

A better example, with a bit more sub-randomness, using additive recurrence and xorshift: https://editor.p5js.org/siobhan.491/sketches/0_enDef3K
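The linked sketch is not reproduced here. As a purely hypothetical illustration of mixing the two, one could perturb the additive recurrence with a small jitter drawn from Marsaglia's 32-bit xorshift generator before thresholding. The jitter scheme, the `jitter` parameter and the function names are assumptions, not the author's method.

```python
import math

ALPHA = (math.sqrt(5) - 1) / 2  # golden ratio conjugate (assumed step)


def xorshift32(state):
    """One step of Marsaglia's 32-bit xorshift generator (state must be nonzero)."""
    state ^= (state << 13) & 0xFFFFFFFF
    state ^= state >> 17
    state ^= (state << 5) & 0xFFFFFFFF
    return state


def jittered_signs(count, jitter=0.25, seed=1, alpha=ALPHA):
    """Hypothetical mix: additive recurrence plus a small xorshift jitter.

    jitter=0 gives the plain additive recurrence; jitter=1 makes each value
    effectively uniform, i.e. ordinary pseudorandom signs.
    """
    state = seed & 0xFFFFFFFF
    if state == 0:
        raise ValueError("xorshift seed must be nonzero")
    signs = []
    for n in range(count):
        state = xorshift32(state)
        u = state / 2**32  # value in [0, 1)
        s = (n * alpha + jitter * (u - 0.5)) % 1.0
        signs.append(1 if s < 0.5 else -1)
    return signs


if __name__ == "__main__":
    print(jittered_signs(16, jitter=0.0))
    print(jittered_signs(16, jitter=0.25))
```

The single `jitter` knob in this sketch trades regularity (and so retained RST structure) against randomness (and so closeness to a true random projection).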