Switch Net 4 (Switch Net N) Combine multiple low width neural layers with a fast transform.

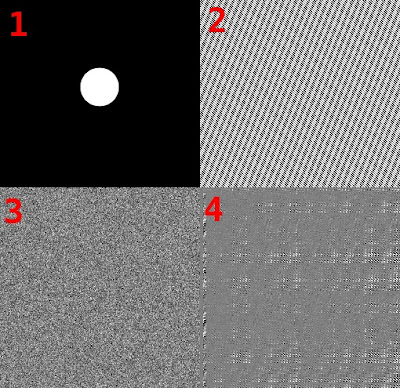

Switch Net 4 Switch Net 4. Random sign flipping before a fast Walsh Hadamard transform results in a Random Projection. For almost any input the result is a Gaussian distributed output vector where each output element contains knowledge of all the input elements. With Switch Net 4 the output elements are 2-way switched using (x<0?) as a switching predicate. Where x is the input to the switch. The switches are grouped together in units of 4 which together form a small width 4 neural. When a particualr x>=0, the pattern in the selected pattern of weights is forward projected with intensity x. When x<0 a different pattern of forward selected weights is again projected with intensity x (x being negative this time.) If nothing was projected when x<0 then the situation would be identical to using a ReLU. You could view the situation in Switch Net 4 as using 2 ReLUs one with input x and one with input -x. The reason to 2-way switch (or +- ReLU) is to avoid ea...